I am continuing my series of talks on fundamental topics like data types. I am proud to be a United States Army Reservist (USAR) Veteran.

Just like boot camp I went to so long ago, I am going nick name the series BASIC TRAINING.

The most basic unit of any database is a TABLE which consists of COLUMNS. A vital decision during the initial database design is to choose the data types that will capture the information you want in the least amount of space.

Today, I am exploring unicode character strings which can be fixed or variable length in size.

I am extenting the sample database named [BASIC] that contains a sample schema named [TRAINING]. The snippet below creates a sample table named [UNICODE_STRINGS] that contains one or more fields for each data type.

Books online describes two data types that are categorized as unicode character strings: NCHAR(N) is defined as fixed length of N and NVARCHAR(N) is defined as variable length from zero to N. Overall, it takes two bytes to represent a single ASCII character. The overhead for NVARCHAR is 2 additional bytes for length.

In SQL Server versions prior to 2005, N was defined from 0 to 4000 so that the record could fit on a single data page. The MAX key word was introduced in SQL Server 2005 in preparation for removing the NTEXT data type in the future. Both NVARCHAR(MAX) and NTEXT can hold textual data up to 1 GB in size.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

<span style="color: #008000; font-size: small;">-- -- Create test tables (unicode character strings) -- -- Delete existing table IF EXISTS ( SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[TRAINING].[UNICODE_STRINGS]') AND type in (N'U')) DROP TABLE [TRAINING].[UNICODE_STRINGS] GO -- Create new table CREATE TABLE [TRAINING].[UNICODE_STRINGS] ( US1 NCHAR(8), -- 16 BYTES US2 NVARCHAR(8) -- DATA SIZE (1..8) * 2 + 2 BYTES OVERHEAD ); GO </span> |

We should test the newly crafted table by inserting data. The first test below will work since all columns are less than or eqaul to eight characters. The second test will fail since truncation will occur due to constant being greater than the field size.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

<span style="color: #008000; font-size: small;">-- Test data set 1 -- Test data set 1 INSERT INTO [TRAINING].[UNICODE_STRINGS] VALUES ( '12345678', 'FIT IN 8' ); GO -- Test data set 2 INSERT INTO [TRAINING].[UNICODE_STRINGS] VALUES ( '11223344', 'DOES NOT FIT IN 8' ); GO </span> |

We can tell the database engine to ignore the truncation warning by using the SET command.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

<span style="color: #008000; font-size: small;">-- Allow truncation to occur SET ANSI_WARNINGS OFF; -- Test data set 2 INSERT INTO [TRAINING].[UNICODE_STRINGS] VALUES ( '11223344', 'DOES NOT FIT IN 8' ); GO -- Return the data from the table SELECT * FROM [TRAINING].[UNICODE_STRINGS] GO </span> |

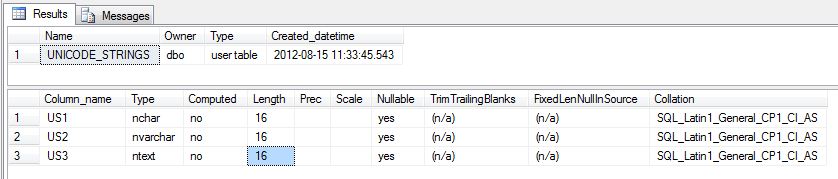

I am going to alter the table definition to experiment with NTEXT data type. By default, data for NTEXT is stored out of row in seperate data pages.

|

1 2 3 4 5 6 7 8 9 10 |

<span style="color: #008000; font-size: small;">-- Add a text type to table ALTER TABLE [TRAINING].[UNICODE_STRINGS] ADD US3 NTEXT; GO -- Update first row with data UPDATE [TRAINING].[UNICODE_STRINGS] SET US3 = 'This is the normal out of row storage with 2 ^ 30 bytes.' WHERE US1 = '12345678'; GO </span> |

The sp_tableoption stored procedure can be used to force the storage of NTEXT data in row. The default for this setting is to store any string that is 256 bytes or less in row. Again, this stored procedure is slated to be discontinued in the future. It is suggested that you use NVARCHAR(MAX) instead.

|

1 2 3 4 5 6 7 8 |

<span style="color: #008000; font-size: small;">-- By default, 256 bytes are stored in row when on EXEC sp_tableoption 'TRAINING.UNICODE_STRINGS', 'text in row', 'ON'; -- Update first row with data UPDATE [TRAINING].[UNICODE_STRINGS] SET CS3 = 'IN ROW STORAGE' WHERE CS1 = '11223344'; </span> |

As a database designer, you should always question the components that make up your database. One question that you might have is ‘What is the maximum number of bytes that a row can have?’.

This is important because data is stored in the *.MDF or *.NDF files as pages ~ 8k. Since a page can only save 8060 bytes, you can figure out how many records can fit on a page and how many bytes are wasted space.

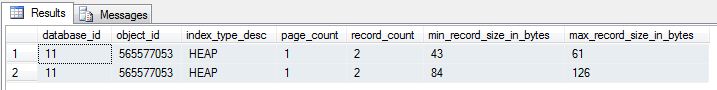

The following code uses the dynamic management view, sys.dm_db_index_physical_stats, to count the number of fields and calculate the maximum row size.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

<span style="color: #008000; font-size: small;"> -- Valid for SQL Server 2005 + SELECT s.database_id, s.object_id, s.index_type_desc, s.page_count, s.record_count, s.min_record_size_in_bytes, s.max_record_size_in_bytes FROM sys.dm_db_index_physical_stats (DB_ID(N'BASIC'), OBJECT_ID(N'TRAINING.UNICODE_STRINGS'), NULL, NULL , 'DETAILED') AS s; GO </span> |

The sp_help stored procedure displays the details of the table. This includes many different settings that can be choosen as a DDL designer such as computed column, field length, nullabilty, and collation to mention a few.

|

1 2 3 |

<span style="color: #008000; font-size: small;">-- Display size details of table EXEC sp_help 'TRAINING.UNICODE_STRINGS'; </span> |

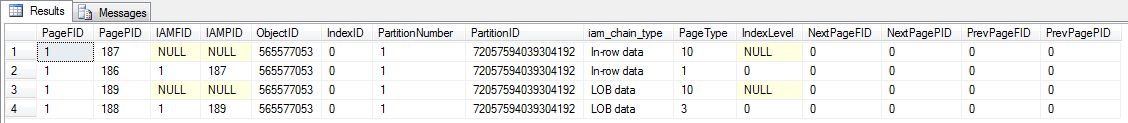

You might have noticed that the index physical statistics DMV displayed two heap pages and three total records. How can we have a total of three records when we did two inserts and two updates? The answer is the out of row storage for BLOBS.

The undocumented DBCC IND command will show the pages being used by the table or HEAP.

|

1 2 3 |

<span style="color: #008000; font-size: small;">-- Show pages of table DBCC IND('BASIC', 'TRAINING.UNICODE_STRINGS', -1); </span> |

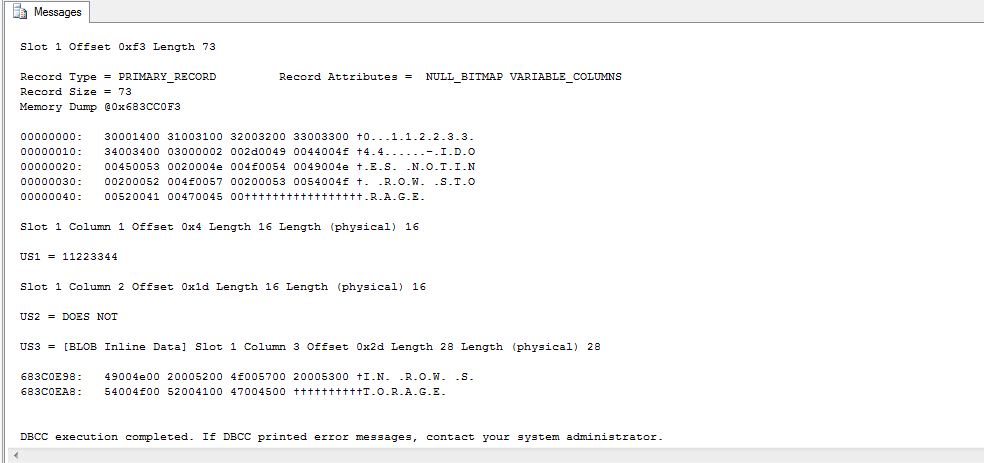

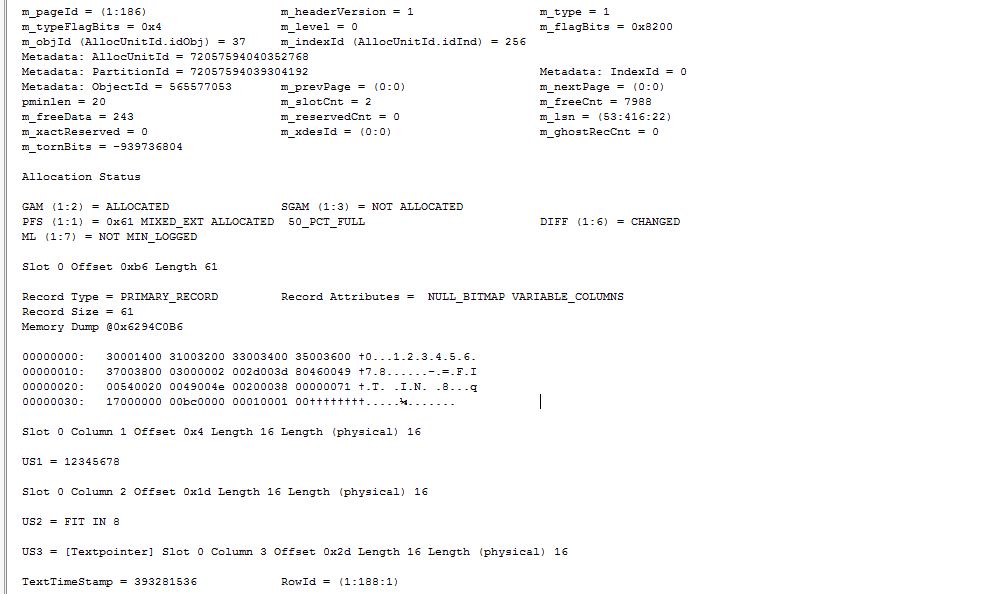

The DBCC TRACEON flag 3604 will dump pages to the output window. The DBCC page command will dump the contents of each page. The DBCC TRACEOFF flag 3604 will restore the flag to its orginal setting.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

<span style="color: #008000; font-size: small;">-- Turn on output DBCC TRACEON(3604) GO -- Dump data page (2 records) DBCC page('BASIC', 1, 186, 3); GO -- Dump page (out of row data) DBCC page('BASIC', 1, 188, 3); GO -- Turn off output DBCC TRACEOFF(3604) GO </span> |

The in row data page entry shows the actual text.

The out of row data page entry shows a text pointer to the BLOB.

In summary, use the NVARCHAR data type when length of the data is double digits or more in size and various international characters are needed. While there is a two byte overhead for the datatype, the odds are the empty space reclaimed by the table will make up a total positive savings. Remember, the NTEXT data type is on the depreciated list. Use the NVARCHAR(MAX) instead.

Next time, I will be reviewing date and/or time data types.

Magnificent post, very informative. I wonder why the other specialists of this sector do not notice this. You must proceed your writing. I am confident, you’ve a huge readers’ base already!|What’s Taking place i’m new to this, I stumbled upon this I have found It absolutely helpful and it has aided me out loads. I’m hoping to contribute & aid different customers like its aided me. Good job.