Most of the time, the transaction log is your friend since it can be used for a point in time recovery. However, when deleting large amounts of data from a database, it can be your enemy. I will be reviewing THREE different TSQL patterns to delete data.

Most of the time, the transaction log is your friend since it can be used for a point in time recovery. However, when deleting large amounts of data from a database, it can be your enemy. I will be reviewing THREE different TSQL patterns to delete data.

Today, we will be using the MATH database that contains prime numbers from 2 to 6 million. I will post the actual files on this article so that they can be detached and reattached as needed for each test.

We need to remove the foreign key constraint before we can delete data from the primary table we are deleting from – [TBL_PRIMES].

|

1 2 3 4 5 6 7 8 |

<span style="color: #008000; font-size: small;">-- Select correct db USE [MATH] GO -- Remove unwanted constraint ALTER TABLE [dbo].[TBL_TOP_500_PRIMES] DROP CONSTRAINT [FK_TBL_PRIMES] GO </span> |

The FIRST design pattern is good for small amounts of data. On the other hand, it will lead to rampart log growth if you are not careful. Since the delete occurs inside a transaction, it can be rolled back if we do not remove the correct amount of data. This is great for preventing unwanted deletions.

Algorithm

Get count of matching records.

BEGIN TRAN (start a T-SQL transaction).

DELETE matching records.

COMMIT or ROLLBACK transaction.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

<span style="color: #008000; font-size: small;">-- -- Delete inside a transaction -- -- Get before info sp_helpdb [MATH] GO -- Get count of records SELECT COUNT(*) AS Total FROM [MATH].[dbo].[TBL_PRIMES]; GO -- Use transaction 2 delete BEGIN TRAN; DELETE FROM [MATH].[dbo].[TBL_PRIMES]; -- ROLLBACK; -- COMMIT; -- Get after info sp_helpdb MATH GO </span> |

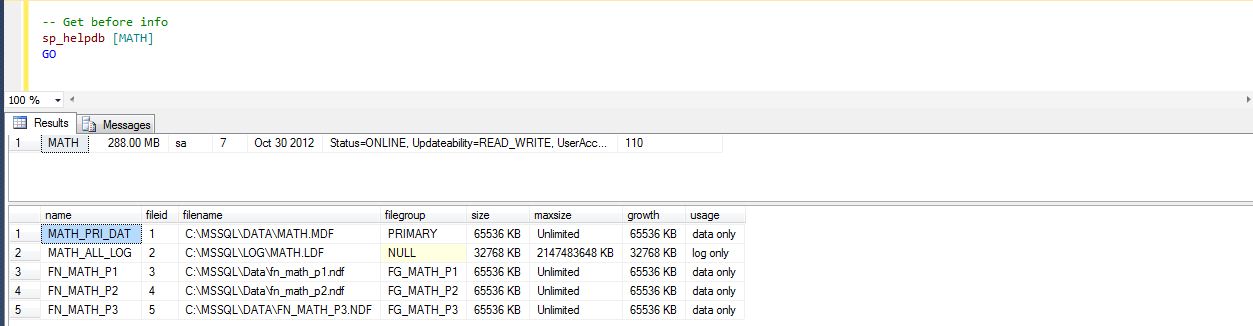

The before image of the database shows a transaction log file size of 32 MB.

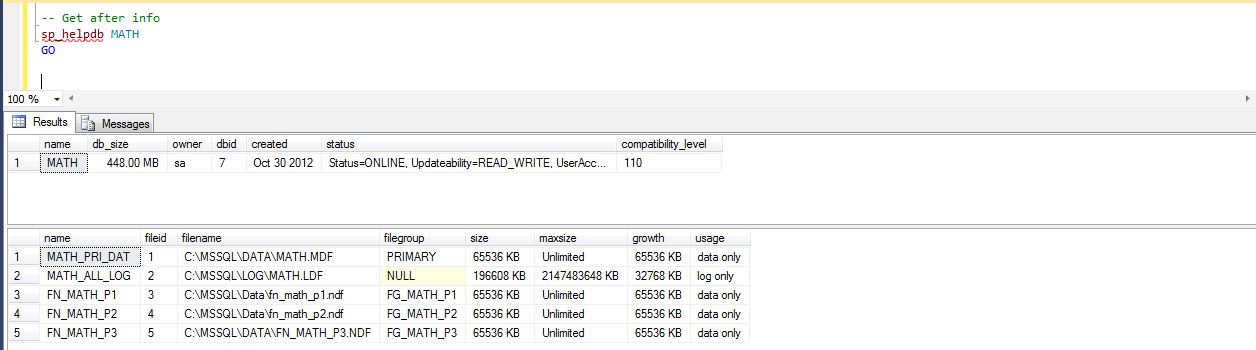

The after image of the database shows a transaction log file size of 196 MB.

The SECOND design pattern is great for large amounts of data. It uses the fact that the transaction log will be truncation upon a backup. This applies to both the FULL and BULK LOGGED recovery models. A while loop is used to delete 10 K of records per batch until there a no more records to delete.

Algorithm

Make sure we are not in SIMPLE recovery mode.

Get count of matching records.

WHILE there are matching records.

{

DELETE matching records in 10 K batch.

BACKUP LOG to disk (truncate log)

}

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 |

<span style="color: #008000; font-size: small;">-- -- Delete in batches w/ log backup in FULL recovery mode -- -- Select correct db USE [MATH] GO -- Set to full mode ALTER DATABASE [MATH] SET RECOVERY FULL; GO -- Get count of records SELECT COUNT(*) AS Total FROM [MATH].[dbo].[TBL_PRIMES]; GO -- Delete in batches DECLARE @VAR_ROWS INT = 1; DECLARE @VAR_CNT INT = 1; DECLARE @VAR_FILE VARCHAR(128) = ''; WHILE (@VAR_ROWS > 0) BEGIN DELETE TOP (10000) FROM [MATH].[dbo].[TBL_PRIMES]; SET @VAR_ROWS = @@ROWCOUNT; SET @VAR_FILE = 'C:\MSSQL\BACKUP\MATH-' + REPLACE(STR(@VAR_CNT, 3, 0), ' ', '0') + '.TRN'; BACKUP LOG [MATH] TO DISK = @VAR_FILE; SET @VAR_CNT = @VAR_CNT + 1; END; GO </span> |

The THIRD and last design pattern is exceptional for large amounts of data. Since we are putting the database into a simple recovery model, I suggest performing a full back up before and after the deletion.

Algorithm

Make sure we are in SIMPLE recovery mode.

Get count of matching records.

While there are matching records.

{

Delete matching records in 10 K batch.

Use checkpoint to truncate log.

}

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 |

<span style="color: #008000; font-size: small;">-- -- Delete in batches in SIMPLE recovery mode -- -- Select correct db USE [MATH] GO -- Set to simple mode ALTER DATABASE [MATH] SET RECOVERY SIMPLE; GO -- Get count of records SELECT COUNT(*) AS Total FROM [MATH].[dbo].[TBL_PRIMES]; GO -- Delete in batches DECLARE @VAR_ROWS INT = 1; WHILE (@VAR_ROWS > 0) BEGIN DELETE TOP (10000) FROM [MATH].[dbo].[TBL_PRIMES]; SET @VAR_ROWS = @@ROWCOUNT; CHECKPOINT; END; GO -- Set to full mode ALTER DATABASE [MATH] SET RECOVERY FULL; GO </span> |

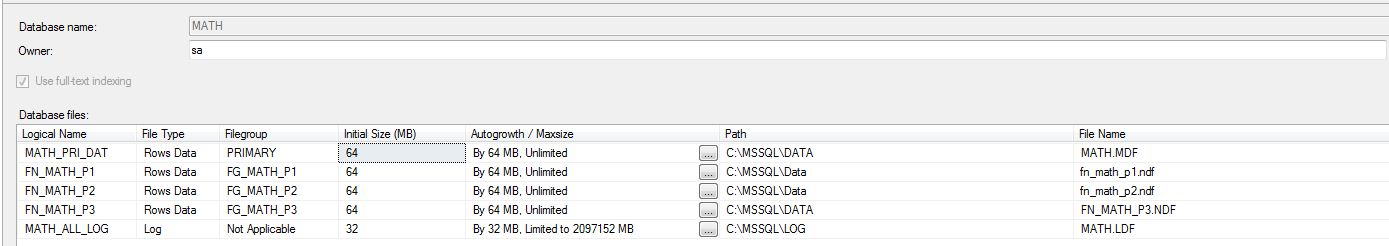

Both design patterns 2 & 3 do not growth the log file since it is truncated after each batch deletion. Please see database size from SQL Server Management Studio (SSMS), database properties window.

I purposely skipped talking about database snapshots. You can use them if you want added protection and quicker recovery from a bad delete statement. The down side of using them is to have disk space equal to or larger than the data you are deleting.

In summary, be careful when deleting data. Use the BEGIN TRAN statement for added protection when doing small deletions. Be cognitive of managing transaction log file growth (BACKUP LOG or CHECKPOINT) during larger deletions. Use DATABASE SNAPSHOTS for added protection if have the processing power and space capacity on your server.

Best wishes on clearing your database of unwanted records.